Why batch?

For the sake of the discussion on this page and the next several pages, assume you have a program. The program has at least one input file and at least one output file.1

You can run this program on your own computer, like so:

Figure 109: Let’s start from here: You’ve got a program. You run it on your computer.

Eventually, there’s one thing that every program needs to have: more. In physics, the typical need is to process more data, which may in turn require more memory, larger disk files, more calculations, etc.

For now, let’s consider the need to process more data in the same amount of time. For example, if your program has to process ten times more events to generate a histogram, it’s going to take longer to run.

Why not just let the program run longer? Part of the answer is that the longer a program runs, the more vulnerable it becomes. I’ve seen programs that take weeks to run.2 What if something goes wrong during that time: a power outage on the computer, you close your laptop’s cover, or someone else runs a job that crashes the computer? Another reason is impatience: why wait days for a program to finish when there are methods to split up the calculations and get the result in minutes?

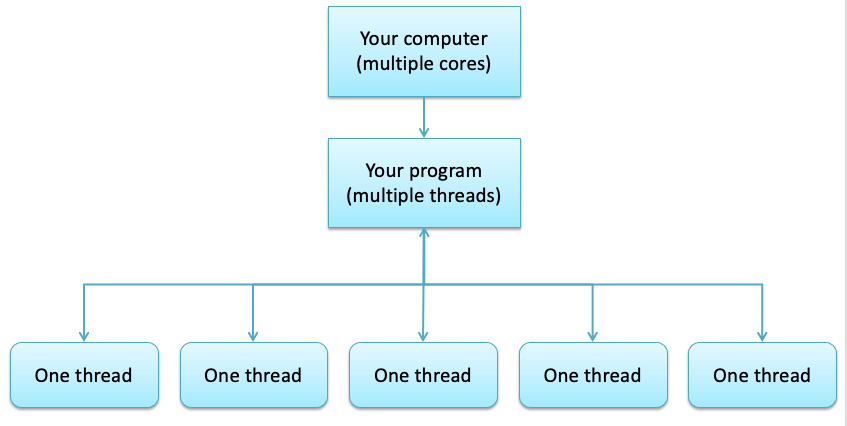

One way to approach this issue is to note that computers now come with more processing cores. It’s a rare laptop that doesn’t have at least four cores; the login systems at Nevis have at least 16 cores each; many systems on modern server farms have hundreds of cores.

One option is to rewrite your program so it can take advantage of multiple cores, with each core running one execution thread. Your program might then be viewed like this:

Figure 110: Multiple programming threads on a single computer.

However, writing a multi-threaded program is not easy. You have to make sure your code is thread-safe: that separate threads don’t make uncoordinated accesses to the same region of computer memory.3

Apart from that, the multi-threaded approach works well if you’re satisfied with the number of cores on your computer. What if you want more?4,5

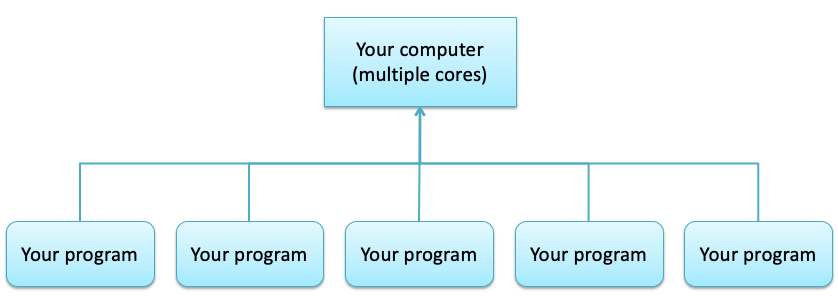

Let’s consider a different method: running your program more than once simultaneously on the same computer.

Figure 111: Multiple instances of the same program on the same computer. You could manage this with the Linux `at` command, but as I note below, I don’t recommend this approach.

This avoids the threading issues. Each program is separate… and therefore requires a separate region of computer memory. That’s part of the “more”; this time it’s “more RAM.”

Let’s think about this for a moment: if you run multiple simultaneous copies of the same program on the same input, each one will produce an identical result. The usual way to handle this issue is to have the program accept a parameter of some kind, and make sure that each instance of the program receives a different value of that parameter. We’ll get into how that can be done in the Condor Tutorial.
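Here is a minimal Python sketch of that idea, assuming the parameter is a job index passed on the command line together with the total number of jobs. Each instance uses its index to pick a different block of events, a different random seed, and a different output file. The script name `myprogram.py`, the functions `events_for_job` and `run_job`, the output-file naming, and the default event count are all hypothetical.

```python
import random
import sys

def events_for_job(job_index, n_jobs, n_events):
    """Return the block of event numbers that instance job_index should process.

    The n_events events are split into n_jobs contiguous blocks whose sizes
    differ by at most one, so every event is processed by exactly one job.
    """
    if not 0 <= job_index < n_jobs:
        raise ValueError("job_index must be in [0, n_jobs)")
    base, extra = divmod(n_events, n_jobs)
    start = job_index * base + min(job_index, extra)
    stop = start + base + (1 if job_index < extra else 0)
    return range(start, stop)

def run_job(job_index, n_jobs, n_events=1_000_000):
    """Hypothetical per-instance setup: which events, which seed, which output file."""
    events = events_for_job(job_index, n_jobs, n_events)
    rng = random.Random(job_index)           # a different random seed per instance
    output_name = f"output_{job_index}.txt"  # a different output file per instance
    return events, rng, output_name

if __name__ == "__main__":
    # Hypothetical invocation: python myprogram.py <job_index> <n_jobs>
    job_index = int(sys.argv[1]) if len(sys.argv) > 1 else 0
    n_jobs = int(sys.argv[2]) if len(sys.argv) > 2 else 1
    events, _rng, output_name = run_job(job_index, n_jobs)
    print(f"job {job_index}: events {events.start} to {events.stop - 1}, writing {output_name}")
```

Running, say, `python myprogram.py 0 4` through `python myprogram.py 3 4` would give four instances that together cover every event exactly once, without any two of them writing to the same file.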

Problem solved? Not quite. Nothing in this approach keeps you from starting any number of programs. At some point you’ll overload your computer: it spends more and more of its time switching between one program and another, and it will probably run out of memory.6

While you could plan carefully and never run more programs than your computer has cores or memory for, you’ve already learned that physicists want “more.”7 In particular, you probably want to just submit your program more than a dozen times and have the computer manage it somehow.

Figure 112: Managing multiple programs on the same computer, with the computer controlling which ones are active. On a single computer, this can be done with the Linux `batch` command. Again, I don’t recommend that you do this.8

This is a batch system: a process by which a computer monitors its resources and only executes a given job when there are enough resources to run it.
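To make that definition concrete, here is a toy Python simulation of a batch system on one computer. It rests on simplifying assumptions that are not from the text: jobs start in the order they were submitted, each job declares in advance how many cores and how much memory it needs, and time is simulated rather than real. It is only a sketch of the idea, not how the Linux `batch` command (which bases its decision on the system load average) or Condor actually decide what to run; `Job` and `simulate_batch` are hypothetical names.

```python
import heapq
from collections import deque
from dataclasses import dataclass

@dataclass
class Job:
    name: str
    cores: int       # cores the job occupies while it runs
    memory_gb: int   # RAM the job occupies while it runs
    duration: float  # run time, in arbitrary units

def simulate_batch(jobs, total_cores, total_memory_gb):
    """Toy single-computer batch system.

    Jobs wait in a first-come, first-served queue. The job at the head of the
    queue starts only when enough cores and memory are free; otherwise the
    system waits for a running job to finish and release its resources.
    Returns (start_time, job_name) pairs in the order the jobs started.
    """
    for job in jobs:
        if job.cores > total_cores or job.memory_gb > total_memory_gb:
            raise ValueError(f"{job.name} can never fit on this computer")

    waiting = deque(jobs)
    running = []  # min-heap of (finish_time, sequence_number, job)
    free_cores, free_memory = total_cores, total_memory_gb
    now = 0.0
    started = []
    sequence = 0

    while waiting or running:
        # Start as many waiting jobs as the free resources allow.
        while (waiting and waiting[0].cores <= free_cores
               and waiting[0].memory_gb <= free_memory):
            job = waiting.popleft()
            free_cores -= job.cores
            free_memory -= job.memory_gb
            heapq.heappush(running, (now + job.duration, sequence, job))
            sequence += 1
            started.append((now, job.name))
        # Nothing more can start right now: jump ahead to the next job
        # completion and give its cores and memory back.
        now, _, finished = heapq.heappop(running)
        free_cores += finished.cores
        free_memory += finished.memory_gb

    return started

if __name__ == "__main__":
    # Four jobs on an 8-core, 16 GB machine. Each needs only 1 core but 8 GB,
    # so memory, not cores, limits us to two jobs at a time.
    jobs = [Job(f"job{i}", cores=1, memory_gb=8, duration=10) for i in range(4)]
    for start, name in simulate_batch(jobs, total_cores=8, total_memory_gb=16):
        print(start, name)  # prints 0.0 job0, 0.0 job1, 10.0 job2, 10.0 job3
```

The example at the bottom shows the point of the definition: you submit all four jobs at once, and the system, not you, holds back the ones that would overload the computer.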

This answers the question “Why batch?”: So you can schedule lots of programs at once without overloading your computer. However, so far we’ve been limited to using a single computer. In the next section, we’ll move to the next level: running your program on multiple computers at the same time.

- 1

If you’ve gone through the ROOT tutorial, of course you have a program!

- 2

If you think I’m joking, ask an astrophysicist or someone working with the GENIE neutrino simulation about “spline files”.

- 3

“Hey, don’t just say thread-safety is hard! Show it!” Okay, whatever you say.

Assume that your program is going to count something, as you did when you learned about applying a cut. That means that each of your threads might execute a line such as:

a = a + 1

Remember, each one of your execution threads is running simultaneously and asynchronously (meaning the threads aren’t “talking” to each other).

Now consider what happens when you execute `a = a + 1`. The computer takes the value stored in the variable `a`. The computer then adds 1 to that value. Then the computer stores that new value into the memory location occupied by the variable `a`.

Except that while one thread is doing this, another thread may be doing the same thing. If both threads read the same value before either one stores its result, they both store the same new value, and one of the increments is lost. What’s the value of `a`? It’s the value that was stored by the last thread to execute that statement. In other words, the value of `a` has become time-dependent; you’ll get different results depending on how fast each thread executes.

“Isn’t there some way around this?” Of course there is! For example, you can define `a` in such a way that each thread has its own copy of `a`, then sum the copies of `a` after all the threads have finished. Or you can use a lock so that only one thread can execute `a = a + 1` at any given instant.

If you’re writing multi-threaded code, you have to think about these issues for every line of code that you write. That’s why it’s hard.
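Here is a minimal sketch of both the problem and the two fixes, written in Python purely for illustration. Because Python’s global interpreter lock makes a real race hard to reproduce on demand, the first function replays one unlucky interleaving of two threads by hand; the other two use real threads, one with a lock and one with per-thread copies summed at the end. The function names are hypothetical.

```python
import threading

def lost_update_demo():
    """Replay, step by step, one unlucky interleaving of two threads doing a = a + 1."""
    a = 0
    read_by_thread_1 = a      # thread 1 reads 0
    read_by_thread_2 = a      # thread 2 also reads 0, before thread 1 stores
    a = read_by_thread_1 + 1  # thread 1 stores 1
    a = read_by_thread_2 + 1  # thread 2 stores 1, overwriting thread 1's result
    return a                  # 1, not 2: one increment was lost

def count_with_lock(n_threads, n_per_thread):
    """Fix 1: a lock ensures only one thread executes a = a + 1 at a time."""
    a = 0
    lock = threading.Lock()

    def work():
        nonlocal a
        for _ in range(n_per_thread):
            with lock:
                a = a + 1

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return a

def count_with_private_copies(n_threads, n_per_thread):
    """Fix 2: each thread counts in its own copy; the copies are summed at the end."""
    partial = [0] * n_threads  # one slot per thread, so no two threads share a slot

    def work(i):
        local = 0
        for _ in range(n_per_thread):
            local = local + 1
        partial[i] = local

    threads = [threading.Thread(target=work, args=(i,)) for i in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(partial)

if __name__ == "__main__":
    print(lost_update_demo())                     # 1
    print(count_with_lock(4, 100_000))            # 400000
    print(count_with_private_copies(4, 100_000))  # 400000
```

The per-thread-copy approach is usually the faster of the two, since the threads never wait on each other; the lock is simpler to retrofit into existing code.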

QED!

- 4

Asking “what if” here is redundant. Physicists always want more. Always.

- 5

In discussing threads in this way, I’m skipping over GPU cards. These contain thousands of processors, each of which can run a separate thread. You may be familiar with this approach because it’s the basis of most modern machine learning.

However, GPU cards are limited to those cases where each thread has a relatively simple and independent execution task (e.g., acting as a node in a neural network). If each of your threads has to calculate the relativistic stochastic and non-stochastic energy loss of a muon and the changes in its trajectory as it passes through liquid argon in a non-uniform magnetic field, then a GPU card may not be able to help.

Also, don’t forget the previous footnote: Even if your task can be accomplished by a GPU card, eventually you will want more. You’ll still want to consider batch job submission to a “farm” of dedicated computers, each with its own set of GPUs.

- 6

Now you know one reason you get the eternal “beach ball” or “hourglass” on your laptop… or why it can freeze entirely.

- 7

In this discussion, when I say “physicists want more,” it’s not exclusive. Other fields of study also want more resources. However, since I mostly hang out with physicists and not geologists or architects, I’ll only speak for the folks I know.

- 8

Why don’t I recommend that you do this? Most computers used in the sciences are shared. You can submit several dozen jobs using `batch`, but what happens when another user on that computer does the same thing? Generally, one of two things happens: the computer slows down, which annoys everyone else in the working group; or you become aware of all those other users (whose work, of course, is less important than yours by definition) whose programs are blocking yours from executing.

Even if you manage to get a working development and execution environment on your own computer, you’ll find that using `batch` may eventually slow your system down.

A solution, as we’ll see, is to distribute all these programs from multiple users onto other computers that no one uses interactively.