Using Condor

Let’s start with the main documentation:

From here on, I’m going to focus more and more on the Nevis particle-physics procedure. That’s not because our condor system is different in any special way. It’s the opposite: because Nevis is a relatively small site (its condor farms[1] have roughly 300 queues), we use a “vanilla” version of condor.[2] Sites with large farms of batch nodes, such as Fermilab and CERN, have special “wrapper” scripts around their condor job submission.

We have to start somewhere, and it might as well be with the Nevis particle-physics environment. This is a typical structure of a set of files you’d prepare for a condor job:

At the lowest level are the program(s) you want to execute.

For example, this might be a python program or a compiled C++ binary. It would be the same kind of program that you used to complete Exercise 11.

Above that, you’ll probably need a “shell script,” that is, a set of UNIX commands to set up the environment in which the programs will execute.

It may help to picture this: when you run your program interactively by using `ssh` to get to a remote server, you generally have to type in some commands before you can actually run a program. The shell script contains those commands.[3]

As a simple example, in this tutorial we run programs that use ROOT. On the Nevis particle-physics systems, the command to set up ROOT is:

conda activate /usr/nevis/conda/root

So our script will contain that shell command (or whatever command sets up ROOT for your site).
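Here is a minimal sketch of such a wrapper script, written out with a shell “here document” so the result is easy to check. The file name `run-root-job.sh` and the program `analyze.py` are hypothetical, and the sketch assumes `conda` is on the PATH of the batch node; substitute whatever setup command your site uses. The extra `conda shell.bash hook` line is there because `conda activate` usually doesn’t work in a non-interactive script until conda’s shell functions have been loaded.

```shell
#!/bin/bash
# Write a hypothetical wrapper script, run-root-job.sh, for a condor job.
# The quoted 'EOF' stops the shell from expanding "$@" and $(...) while writing.
cat > run-root-job.sh <<'EOF'
#!/bin/bash
# Stop at the first failing command, so the job exits with that command's status.
set -e

# Load conda's shell functions so "conda activate" works in a non-interactive script.
# Assumption: conda is on the PATH of the batch node.
eval "$(conda shell.bash hook)"

# Set up ROOT the Nevis particle-physics way; substitute your site's command.
conda activate /usr/nevis/conda/root

# Run the program. analyze.py is a hypothetical name; any arguments
# given to this script are passed along unchanged.
python analyze.py "$@"
EOF

# condor has to be able to execute the script on the batch node.
chmod +x run-root-job.sh
```

Note that nothing in the script changes to one of your own directories; the program runs wherever condor starts the job.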

At the highest level is the condor command file. This is the file that contains the commands to control what condor does with your program as it transfers it to a batch node.

For example, it’s this file that contains the ClassAds, in the form of a `requirements` statement, to select the properties of the batch node.
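A minimal sketch of a condor command file with a `requirements` statement follows, again written out from the shell. The file names are hypothetical, and the ClassAd expression (64-bit Linux nodes only) is just an example; your site may want different requirements, and the job is not actually submitted here.

```shell
#!/bin/bash
# Write a hypothetical condor command (submit) file, run-root-job.cmd.
# The quoted 'EOF' keeps the shell from touching condor's $(Process) macro.
cat > run-root-job.cmd <<'EOF'
# Use condor's default execution environment.
universe                = vanilla
# The wrapper script condor runs on the batch node (hypothetical name).
executable              = run-root-job.sh
# $(Process) is the job number within this submission: 0, 1, 2, ...
arguments               = $(Process)
# ClassAd expression: only match 64-bit Linux batch nodes.
requirements            = (OpSys == "LINUX") && (Arch == "X86_64")
# Copy files to and from the batch node instead of assuming shared directories.
should_transfer_files   = YES
when_to_transfer_output = ON_EXIT
# Where the program's printout and condor's own log go.
output                  = run-root-job-$(Process).out
error                   = run-root-job-$(Process).err
log                     = run-root-job.log
# Submit four jobs.
queue 4
EOF

# On a machine with condor installed, you would then submit it with:
#   condor_submit run-root-job.cmd
```

Because `$(Process)` takes a different value in each of the four jobs, each job gets its own argument and its own output and error files, while all four share one log.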

Yes, it’s a file within a file within a file. Once you get the hang of it, it’s less complex than it sounds, especially if you start with an example. We’ll look at one in the next section.

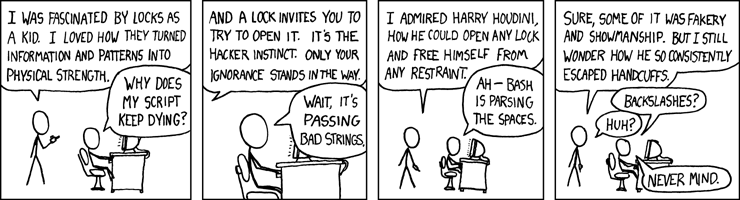

Figure 119: https://xkcd.com/234/ by Randall Munroe

[1] I say “farms” because the Nevis particle-physics groups have three condor batch farms:

- ATLAS Tier3 cluster
- Neutrino cluster
- a “general” cluster

The reasons for the separate farms are mostly historical, and have to do with dull things like moving the job to the data and funding issues (“Why is this other group’s job running on a system that our group paid for?”).

[2] As you get to understand condor better, you’ll realize that this is a pun. There are several different execution environments in condor, called universes, and the default universe is called “vanilla”.

If you clicked on the link in the above paragraph to learn about condor universes, I’ll make things easy for you: Unless your group explicitly instructs you differently for your batch system, you’ll always want to use the vanilla universe.

[3] There is an important exception to this. When you log in to a remote server, you may type commands like `cd` to go to the directory that contains your program. As we’ll see in Resource Planning, you cannot do this in condor, because a batch node won’t have access to any of your directories. If your program needs any files to run, you must tell condor to transfer them to the batch node.
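To make the file-transfer point concrete, here is a minimal sketch of the relevant lines in a condor command file, written out from the shell. The names `run-root-job.sh`, `analyze.py`, and `input-data.root` are hypothetical; the idea is that the wrapper script refers to these files by bare name instead of using `cd` to reach one of your directories.

```shell
#!/bin/bash
# Write a hypothetical condor command file that ships the program and its
# input file to the batch node. All file names here are assumptions.
cat > transfer-example.cmd <<'EOF'
universe                = vanilla
# The executable itself is transferred by default.
executable              = run-root-job.sh
# Copied into the job's scratch directory on the batch node, where the
# wrapper script can use them by bare name, with no "cd".
transfer_input_files    = analyze.py, input-data.root
should_transfer_files   = YES
# By default, new or modified files in the scratch directory are copied
# back when the job exits.
when_to_transfer_output = ON_EXIT
log                     = transfer-example.log
queue
EOF
```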